Winter School - 2022

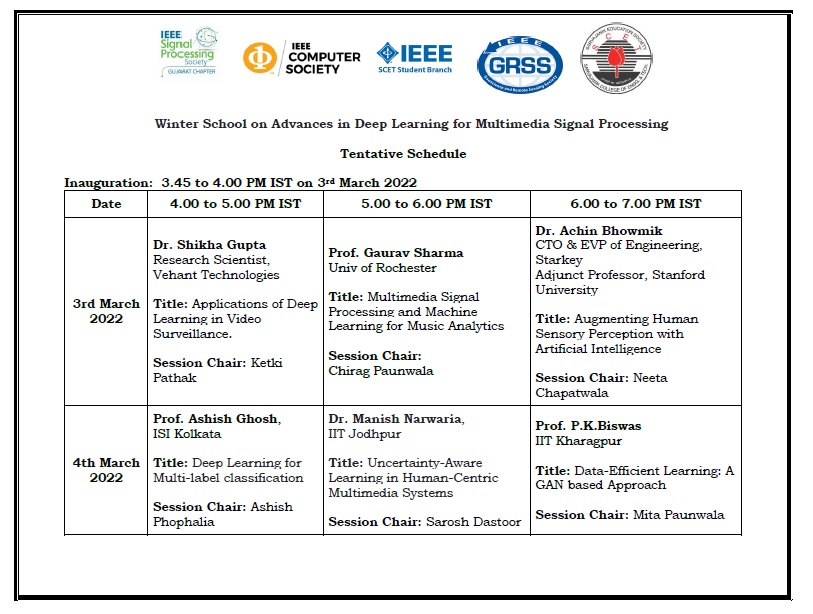

Winter School on Advances in Deep Learning for Multimedia Signal Processing

3rd to 5th March 2022

The remarkable progress in the field of deep-learning-based multimedia systems has encouraged researchers to propose various deep architectures for a variety of applications. The winter school will be focused on proposing the methods to encode the complicated quality-related feature cues into the current deep quality models, creating the advanced datasets for training a deep model, and proposing the methods to systematically develop datasets, benchmarks, and evaluation platforms for testing the deep model performance. Various deep neural networks, including feed-forward neural networks, convolutional neural networks, etc along with the applications of Geoscience and Remote Sensing using deep learning and AI/ML techniques will also be explored.

Webex Link:

Day1: https://ieeemeetings.webex.com/ieeemeetings/j.php?MTID=m53bf3620d2a55e7ac8dccf0f0404e8ac

Day2: https://ieeemeetings.webex.com/ieeemeetings/j.php?MTID=m95c073f653d39c14aa3320dda6b88199

Day3: https://ieeemeetings.webex.

General Chair

- Chirag Paunwala

Program Chair

- Ashish Phophalia

- Shiv Mohan

Organizing Chair

- Mita Paunwala

- Ketki Pathak

Organizing Committee

- Amit Nanavati

- Arpan Desai

- Nirali Nanavati

- Sarosh Dastoor

Technical Support

- Tejal Surati

|

Dr. Shikha Gupta Research Scientist at Vehant Technologies Title of Talk: Applications of Deep Learning in Video Surveillance. Abstract:Surveillance systems are widely utilized to monitor public and private locations. Understanding a 24*7 video stream for real-time detection of humans, vehicles, objects, attributes, and events is the new frontier of today’s artificial intelligence surveillance advancement. Artificial intelligence-based surveillance systems developed in the last few years are overcoming these limitations. They can process thousands of camera feeds and detect th Speaker BiographyDr. Shikha Gupta is a Research Scientist at Vehant Technologies. She received her Ph.D. degree in machine learning and computer vision from IIT Mandi. Before this, she has worked with Samsung Research Institute, TCS Research lab, and NIT Delhi. Her research interest lies in scene understanding, pattern recognition, and computer vision. Her research work has been disseminated in several journals and conferences, including IEEE, Elsevier, IJST, ACM TOMM, ICASSP, EUSIPCO, IJCNN, ICONIP, etc. Currently, she is actively working towards action recognition, video summarization, person attribute recognition, and video analytics tasks. |

|

Prof. Gaurav Sharma Abstract:Modern music performances are typically multimodal: while sound is the principal modality, video and imagery strongly supplement the audio and are key drivers of the popularity of music videos. Multimedia signal processing and machine learning can therefore be particularly valuable in the analysis of music. In this talk, we highlight several recent projects that synergistically combine analysis across multiple modalities to provide effective and powerful solutions for a number of problems in music analytics. We illustrate the ideas in the context of chamber music performances by creating a novel dataset that enables better objective assessment of solution approaches and then highlight how multimedia signal processing combined with machine learning can address problems such as source association, source separation, multi-pitch analysis, and vibrato detection and analysis. Finally, we highlight emerging directions and opportunities in this field. Speaker BiographyGaurav Sharma is a professor in the Departments of Electrical and Computer Engineering, Computer Science, and Biostatistics and Computational Biology, and a Distinguished Researcher in Center of Excellence in Data Science (CoE) at the Goergen Institute for Data Science at the University of Rochester. He received the PhD degree in Electrical and Computer engineering from North Carolina State University, Raleigh in 1996. From 1993 through 2003, he was with the Xerox Innovation group in Webster, NY, most recently in the position of Principal Scientist and Project Leader. His research interests include data analytics, cyber physical systems, signal and image processing, computer vision, and media security; areas in which he has 54 patents and has authored over 220 journal and conference publications. He served as the Editor-in-Chief for the IEEE Transactions on Image Processing from 2018-2020, and for the Journal of Electronic Imaging from 2011-2015 and currently serves on the editorial board for the Proceedings of the IEEE. He is a member of the IEEE Publications, Products, and Services Board (PSPB) and chaired the IEEE Conference Publications Committee in 2017-18. He is the editor of the Digital Color Imaging Handbook published by CRC press in 2003. Dr. Sharma is a fellow of the IEEE, a fellow of SPIE, a fellow of the Society for Imaging Science and Technology (IS&T) and has been elected to Sigma Xi, Phi Kappa Phi, and Pi Mu Epsilon. In recognition of his research contributions, he received an IEEE Region I technical innovation award in 2008. Dr. Sharma served as a 2020-2021 Distinguished Lecturer for the IEEE Signal Processing Society and is a recipient of the 2021 IS&T Raymond C. Bowman award. |

|

Mr. Achin Bhowmik CTO & EVP of Engineering, Starkey Adjunct Professor, Stanford University Title of Talk: Augmenting Human Sensory Perception with Artificial Intelligence AbstractThe human sensory perception system is profoundly remarkable. We sense and understand the world around us with incredible ease. However, as we age, our sensation, perception, and cognition systems generally degrade. Overexposure to harmful stimulus levels can also result in catastrophic impact to our sensory system. For example, according to the World Health Organization (WHO), about half a billion people live with disabling hearing loss. Approximately 40% of the population experience some form of balance difficulty due to degradation of the vestibular sensory system. WHO also estimates that at least a billion people globally live with vision impairment that are yet to be addressed. Sustained sensory deprivations have been associated with cognitive declines. Speaker BiographyDr. Achintya K. (Achin) Bhowmik is the chief technology officer and executive vice president of engineering at Starkey Hearing Technologies, a privately-held medical devices company with over 5,000 employees and operations in over 100 countries worldwide. In this role, he is responsible for the company’s technology strategy, global research, product development and engineering departments, and leading the drive to transform hearing aids into multifunction wearable health and communication devices with advanced sensors and artificial intelligence. Previously, Dr. Bhowmik was vice president and general manager of the Perceptual Computing Group at Intel Corporation, where he was responsible for the R&D, engineering, operations, and businesses in the areas of 3D sensing and interactive computing, computer vision and artificial intelligence, autonomous robots and drones, and immersive virtual and merged reality devices. Dr. Bhowmik serves on the faculty of Stanford University as an adjunct professor at the Stanford School of Medicine, where he advises research and lectures in the areas of cognitive neuroscience, sensory augmentation, computational perception, and intelligent systems. He has also held adjunct and guest professor positions at the University of California, Berkeley, Liquid Crystal Institute of the Kent State University, Kyung Hee University, Seoul, and the Indian Institute of Technology, Gandhinagar. Dr. Bhowmik is a member of the board of trustees for the National Captioning Institute, board of directors for OpenCV, board of advisors for the Fung Institute for Engineering Leadership at the University of California, Berkeley, and industry advisory board for the Institute for Engineering in Medicine and Biomedical Engineering at the University of Minnesota. He is also on the board of directors and advisors for several technology startup companies. His awards and honors include Fellow of the Institute of Electrical and Electronics Engineers (IEEE), Fellow and President-Elect of the Society for Information Display (SID), Healthcare Heroes award from the Business Journals, Industrial Distinguished Leader award from the Asia-Pacific Signal and Information Processing Association, IEEE Distinguished Industry Speaker, TIME’s Best Inventions, Red Dot Design award, and the Artificial Intelligence Breakthrough award. He has authored over 200 publications, including two books and over 80 granted patents. Dr. Bhowmik and his work have been covered in numerous press articles, including TIME, Fortune, Wired, USA Today, US News & World Reports, Wall Street Journal, CBS News, BBC, Forbes, Bloomberg Businessweek, Scientific American, Popular Mechanics, MIT Technology Review, EE Times, The Verge, etc. |

|

Prof. Ashish Ghosh Title of Talk: CTO & EVP of Augmenting Human Sensory Perception with Artificial Intelligence AbstractMulti-label classification (MLC) is a generalization of the traditional single-label/multi-class classification. This lecture will be on the what, why and how of multi-label classification. To understand the basics, we will begin with “what” multi-label data classification is. Then, we will discuss “why” it is needed, focussing on some real-life application areas where it is used. Next, we will move on to “how” multi-label classification is performed, and highlight some popular ML classification models in the literature. Finally, some datasets, metrics relevant to ML classification will be discussed. Speaker BiographyStarting as an electronic engineer (1983-’87), Prof. Ghosh moved to computer science during his master’s |

|

Dr. Manish Narwaria AbstractMachine learning (ML) and Deep learning (DL) methods are popular in several application areas including multimedia signal processing. However, most existing solutions tend to penalize predictions that deviate from the target ground-truth values. In other words, uncertainty in the ground-truth data is largely ignored. This can lead to an unreasonable scenario where the trained DL model may not be allowed the benefit of doubt present in the data. The said problem becomes more significant in the context of more recent human-centric and immersive multimedia systems where user feedback and interaction are influenced by higher degrees of freedom (leading to higher levels of uncertainty in the ground truth). Thus, in this talk, I will outline how uncertainty in data can be exploited for better model optimization and validation. Speaker BiographyManish Narwaria is an Assistant Professor in the Department of Electrical Engineering at IIT Jodhpur. He received the Ph.D. degree in Computer Engineering from Nanyang Technological University, Singapore, in 2012. After that, he was a Researcher at LS2N (formerly IRCCyN-IVC), Nantes, France. His major research interests include the area of multimedia signal processing with focus on perceptual aspects toward content capture, processing, and transmission. |

|

Prof. P.K.Biswas IIT Kharagpur Title of Talk: Data-Efficient Learning: A GAN based Approach AbstarctGenerative Adversarial Network (GAN) is a relatively recent concept in deep generative learning wherein two neural networks are pitted against each other in a zero-sum non-cooperative game to match a non-stationary distribution to an intractable stationary distribution. In this lecture we shall talk about how the generative modelling capability of GAN can be exploited to design efficient frameworks for image/video inpainting. Semantic inpainting refers to realistically filling up large holes on an image or video frame. Our approach is to first train a generative model to map a latent noise distribution to natural image manifold and during inference time, search for the best-matching noise vector to reconstruct the signal. The primary drawback of this approach is its inference time iterative optimization and lack of photo-realism at higher resolution. In this talk both of the above mentioned shortcomings are addressed. This is made possible with a nearest neighbor search based initialization (instead of random initialization) of the core iterative optimization involved in the framework. The concept is extended for videos by temporal reuse of solution vectors. Significant speedups of about 4.5-5x on images and 80x on videos is achieved. Simultaneously, the method achieves better spatial and temporal reconstruction qualities. Speaker BiographyProf. Prabir Kumar Biswas received his B.Tech., M.Tech., and Ph.D. degrees in |

|

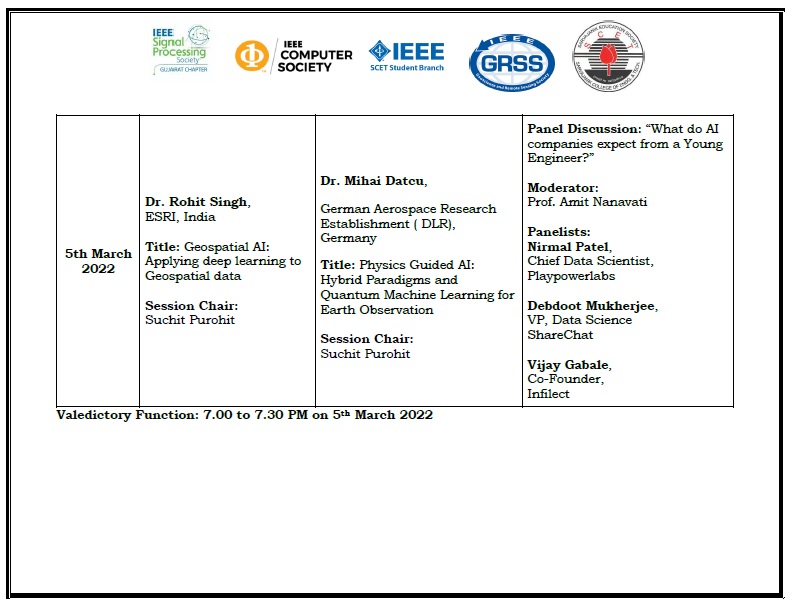

Mr. Rohit Singh Director, ESRI, India, New Delhi Title of Talk:Geospatial AI: Applying deep learning to Geospatial data AbstractThis session will showcase the application of AI to different types of Geospatial datasets. Attend this session to learn about deep learning, how it can be applied to GIS, the different types of geospatial deep learning models, and how you can train and use them with ArcGIS. This session will showcase the application of ArcGIS’s AI capabilities with imagery and video (object detection, pixel classification and object classification, image to image translation, edge detection, and change detection); unstructured text; feature, tabular, and time series data; and 3D point clouds and meshes. Speaker BiographyRohit Singh is the director of Esri’s AI research and development labs in New Delhi, India. He is passionate about Geospatial AI, Machine Learning & Deep Learning and bringing them to GIS. Rohit leads by example and empowers his team of talented data scientists, software developers and product engineers to build the next generation of geospatial data science products. This awesome team is working on cutting edge technologies and making groundbreaking advances in Geospatial AI and the next generation of Enterprise GIS. Rohit possesses superior leadership, team building, communication and interpersonal skills. Rohit cares deeply about API design, and has conceptualized, designed and developed the ArcGIS API for Python and ArcGIS Engine Java API. He loves solving complex engineering problems and his innovations underpin several product families in the ArcGIS platform including ArcObjects Java and ArcGIS Enterprise on the Linux platform. Rohit has worked at all levels of the software stack from assembly code and device drivers to desktop applications, web apps to mobile, and cloud technologies to deep learning. Conceptualizing software products and seeing them through to completion is a recurring theme in his life. Rohit is a graduate of Indian Institute of Technology, Kharagpur, and has worked at computer vision startups, TCS and IBM before joining Esri. He has been recognized as an Industry Distinguished Lecturer for the IEEE- Geoscience and Remote Sensing Society (GRSS) and serves as a plenary speaker at several international conferences. |

|

Dr. Mihai Datcu, German Aerospace Research Establishment ( DLR), Germany Speaker Biography:Mihai Datcu received the M.S. and Ph.D. degrees in electronics and telecommunications from the University Politehnica of Bucharest (UPB), Bucharest, Romania, in 1978 and 1986, respectively, and the habilitation a Diriger Des Recherches degree in computer science from the University Louis Pasteur, Strasbourg, France, in 1999. Since 1981, he has been a Professor with the Department of Applied Electronics and Information Engineering, Faculty of Electronics, Telecommunications and Information Technology, UPB. Since 1993, he has been a Scientist with the German Aerospace Center (DLR), Wessling, Germany. His research interests include explainable and physics aware Artificial Intelligence, smart radar sensors design, and qauntum machine learning with applications in Earth Observation. He has held Visiting Professor appointments with the University of Oviedo, Spain, the University Louis Pasteur and the International Space University, both in Strasbourg, France, University of Siegen, Germany, University of Innsbruck, Austria, University of Alcala, Spain, University Tor Vergata, Rome, Italy, University of Trento, Italy, Unicamp, Campinas, Brazil, China Academy of Science (CAS), Shenyang, China, Universidad Pontificia de Salamanca, campus de Madrid, Spain, University of Camerino, Italy, the Swiss Center for Scientific Computing (CSCS), Manno, Switzerland. From 1992 to 2002, he had an Invited Professor Assignment with the Swiss Federal Institute of Technology (ETH Zurich), Switzerland. Since 2001, he had been initiating and leading the Competence Center on Information Extraction and Image Understanding for Earth Observation, Paris Institute of Technology, ParisTech, France, a collaboration of DLR with the French Space Agency (CNES). He has been a Professor holder of the DLR-CNES Chair at ParisTech. He has initiated the European frame of projects for image information mining (IIM) and is involved in research programs for information extraction, data mining and knowledge discovery, and data science with the European Space Agency (ESA), NASA, and in a variety of national and European projects. He is the Director of the Research Center for Spatial Information, UPB. He is a Senior Scientist and the Data Intelligence and Knowledge Discovery Research Group Leader with the Remote Sensing Technology Institute, DLR and delegate in the DLR-ONERA Joint Virtual Center for AI in Aerospace. He is member of the ESA Working Group Big Data from Space and Visiting Professor withe ESA’s Φ-Lab. He was the recipient the National Order of Merit with the rank of Knight, for outstanding international research results, awarded by the President of Romania, in 2008, and the Romanian Academy Prize Traian Vuia for the development of the SAADI image analysis system and his activity in image processing, in 1987, he was awarded the Chaire d’excellence internationale Blaise Pascal 2017 for international recognition in the field of data science in earth observation, and the 2018 Ad Astra Award for Excellence in Science. He has served as a Co-organizer for international conferences and workshops and as Guest Editor for a special issues on AI and Big Data of the IEEE and other journals. He is representative of Romanian in the European Space Agency (ESA) Earth Observation Program Board (EO-PB). He is IEEE Fellow. |

Click Below to Register:

Winter School on “Advances in Deep Learning for Multimedia signal Processing (ADL-MSP) 03-05 March 2022

Moderator

| Amit Nanavati Professor, Ahmedabad University Biography:

Amit A. Nanavati is a Professor at Ahmedabad University and an Adjunct Professor at IIT Delhi. Prior to this, Amit was a Senior Technical Lead for Cognitive Automation Solutions at IBM GTS Innovation Labs (2016-21), and a part of IBM Research (2000-16). His research interests lie in network science and data science. Amit has co-authored a book on “Speech in Mobile and Pervasive Environments” published by John Wiley, UK in 2012. He has over 60 US patents granted and over 60 publications. He became an ACM Distinguished Speaker in 2014, and an ACM Distinguished Scientist in 2015. |

Panelists

| Nirmal Patel Chief Data Scientist, Biography:

Nirmal builds innovative technologies to solve challenging problems in modern education. He has fifteen years of hands-on experience spanning across learning science, data science, AI, and programming. Nirmal heads AI and Data Science research at Playpower Labs – an EdTech Services Company that provides AI, Product Design, Data Science, and Technology consulting services to educational organizations. Playpower has done more than 50 successful projects with partners like Pearson K-12, Carnegie Learning, HMH, BrainPOP, AltSchool, and India’s Ministry of Education. |

| Debdoot Mukherjee VP, Data Science Biography:Debdoot is passionate about applying science to turn data into products. Over a career of 12+ years, he has been involved in a variety of roles in research, engineering management and technical architecture. Debdoot leads the AI team at ShareChat, India’s fastest growing social media platform and Moj, India’s #1 short video app. They are building a world class team of ML scientists and engineers in the areas of Feed ranking, Ad |

| Vijay Gabale Co-Founder, Biography:

Vijay is the co-founder of a retail-focused AI enterprise (B2B) https://infilect.com operating out of Bangalore, India. Infilect is solving the problem of optimising of supply-chain for the worldwide retail industry, with its focus on retail visual intelligence. Infilect specifically helps retail brands and retailers solve out of stock, over stocking, product shrinkage, counterfeit products etc. Fundamental to the sustainable human growth is this basic economic principle that humans need to optimally utilise natural resources in order to produce and consume, and help themselves survive, earn, and grow. Vijay’s philosophy is to build products that solve fundamental and large-scale problems in order to optimise the use of natural resources, and directly but positively impact this cycle of production and consumption. Vijay believes that software and AI hold the key to solve these problems with an efficiency and effectiveness that the world has never seen before. |